Glaze, a new program from the University of Chicago, aims to protect art from AI theft by confusing the AI models that try to copy it.

Glaze, developed by a University of Chicago team led by Neubauer Professor Ben Zhao, is a tool that helps protect online artwork from current iterations of AI models. It works by ‘confusing’ AI models’ pattern recognition: it applies what the team calls a “cloak,” which makes small changes to the artwork in specific patterns so that certain current-generation AI models cannot reproduce it accurately.

How Glaze works

Glaze does this by exploiting a concept called adversarial examples. The Glaze Project website at the University of Chicago explains further: “the Achilles’ heel for AI models has been a phenomenon called adversarial examples – small tweaks in inputs that can produce massive differences in how AI models classify the input. Adversarial examples have been recognized since 2014 (here’s one of the first papers on the topic), and numerous papers have attempted to prevent these adversarial examples since.”
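To make the idea of an adversarial example concrete, here is a minimal sketch in Python. It assumes a toy linear classifier rather than a real image model, and it is not Glaze's code: the names `score`, `label`, `flip_epsilon` and `adversarial_example` are hypothetical, and the sketch ignores details such as keeping pixel values in a valid range. It only shows how a tiny, uniform change to every pixel can flip the classifier's answer.

```python
import random

def score(weights, pixels):
    """Toy linear classifier: a positive score means 'style A', otherwise 'style B'."""
    return sum(w * p for w, p in zip(weights, pixels))

def label(weights, pixels):
    return "style A" if score(weights, pixels) > 0 else "style B"

def flip_epsilon(weights, pixels):
    """Smallest uniform per-pixel change (plus 1%) that pushes the score across zero."""
    return 1.01 * abs(score(weights, pixels)) / sum(abs(w) for w in weights)

def adversarial_example(weights, pixels):
    """Shift every pixel by the same tiny amount, each in the direction that hurts the current class."""
    eps = flip_epsilon(weights, pixels)
    direction = -1.0 if score(weights, pixels) > 0 else 1.0
    return [p + direction * eps * ((w > 0) - (w < 0)) for w, p in zip(weights, pixels)]

if __name__ == "__main__":
    random.seed(0)
    n = 100 * 100  # a hypothetical 100x100 grayscale image, flattened
    weights = [random.uniform(-1, 1) for _ in range(n)]
    pixels = [random.uniform(0.2, 0.8) for _ in range(n)]
    tweaked = adversarial_example(weights, pixels)
    print("per-pixel change:", flip_epsilon(weights, pixels))
    print("before:", label(weights, pixels), "| after:", label(weights, tweaked))
```

Because the change is spread across thousands of pixels, each pixel moves only a little, yet the effects add up in the classifier's score. Real image models are far more complex, but the quote above describes the same sensitivity.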

User concerns with Glaze

Currently, one of users’ main concerns is that, depending on the style of their art, their images sometimes pick up additional artifacts when compressed. Zhao responded that the project is still in early development and that updates are coming: “We still need to fix some basic things and then will look at better ways to minimize visual impact.”

Asked whether AI models may eventually evolve past the protection offered by programs like Glaze, the project’s website answers, “It’s certainly possible, but we expect that would require significant changes in the underlying architecture of AI models. Until then, cloaking works precisely because of fundamental weaknesses in how AI models are designed today.”

Glaze’s release

Glaze was released in collaboration with Puerto Rican artist Karla Ortiz, who created the oil painting ‘Musa Victoriosa’ to accompany the program as the first artwork to use its “cloak.” Ortiz is one of three artists currently suing Stability AI (maker of Stable Diffusion) and Midjourney.

The three artists, Sarah Andersen, Kelly McKernan, and Karla Ortiz, allege that these companies have infringed on the rights of “millions of artists” by training their AI on five billion images scraped from the web “without the consent of the original artists.”

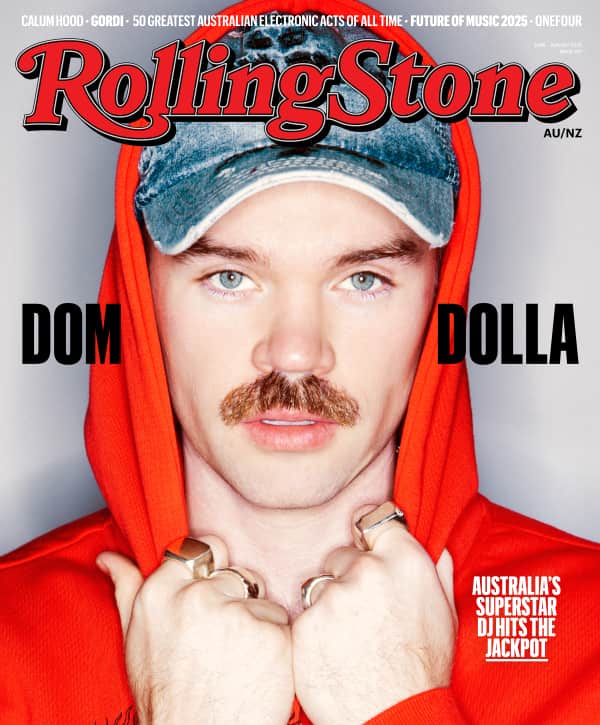

1/ This might be the most important oil painting I’ve made:

Musa Victoriosa

The first painting released to the world that utilizes Glaze, a protective tech against unethical AI/ML models, developed by the @UChicago team led by @ravenben. App out now 👇 https://t.co/cNIXNDHMBy pic.twitter.com/Y1MqVK7yvZ

— Karla Ortiz (@kortizart) March 15, 2023

2/ This painting is a love letter to the efforts of this incredible research team and to the amazing artist community. This transformational tech takes the first of many steps, to help us reclaim our agency on the web, by making our work not be so easily exploited. Detail shots: pic.twitter.com/VwFMdn52Jo

— Karla Ortiz (@kortizart) March 15, 2023

3/ So how does Glaze work? @ravenben describes:

“Glaze analyzes your art, and generates a modified version (with barely visible changes). This "cloaked" image disrupts AI mimicry process.” Quote tweet below. For a more in-depth description visit here: https://t.co/dnbOdpLpTt https://t.co/Pj3XTb2iZL

— Karla Ortiz (@kortizart) March 15, 2023

4/Best part about Glaze? It’s free for everyone and the researchers will be working hard to bring even more updates to this badass tool! So my painting can look even closer to the source amongst other features. So share far and wide!

App link again: https://t.co/cNIXNDHMBy pic.twitter.com/2M7JuV1C8T

— Karla Ortiz (@kortizart) March 15, 2023

Other artists have also commented on the advent of Glaze. To be clear, though, the program doesn’t prevent art from being scraped into an AI’s dataset; it works as a first step against AI theft by stopping AI models from replicating an artist’s style from the cloaked images.

This is a big deal. People have worked to develop a way to essentially block #AIart scrapers from taking your work if you run it through this program.

I haven't tested it myself, and this is just the BETA, But I trust people like @kortizart who put it through it's paces. https://t.co/6BgwaTbqX0

— Nate Horsfall (@Lightning_Arts) March 16, 2023

Project lead Ben Zhao also took to Twitter to explain the program and its utility further.

Just to expand a wee bit more. Glaze's cloaking effect is not like a watermark or hidden signal. It studies the AI art models representation of "artistic style," then disrupts it in that dimension. Kinda like changing the ultrasonic melody of a sound for dogs (if models=dogs).

— Ben Zhao (@ravenben) March 16, 2023
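Zhao’s description of disrupting a model’s representation of style can be pictured with a small Python sketch. This is an illustration under loud assumptions, not Glaze’s actual algorithm: the “style representation” here is a hypothetical fixed linear map (`encoder`), and the names `style_features` and `cloak` are invented for the example. The sketch nudges an image so that its features drift toward those of a different style, while capping how much any single pixel may change.

```python
import random

def style_features(encoder, pixels):
    """Toy stand-in for a model's style representation: one fixed linear map."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in encoder]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def cloak(encoder, pixels, target_features, budget, steps=50):
    """Projected gradient descent: move the image's features toward target_features
    without changing any pixel by more than `budget`."""
    # A step of 1 / (2 * squared Frobenius norm) is small enough that the distance never increases.
    step = 1.0 / (2.0 * sum(w * w for row in encoder for w in row))
    delta = [0.0] * len(pixels)
    for _ in range(steps):
        cloaked = [p + d for p, d in zip(pixels, delta)]
        residual = [f - t for f, t in zip(style_features(encoder, cloaked), target_features)]
        # Gradient of the squared feature distance with respect to each pixel.
        grad = [2.0 * sum(row[i] * r for row, r in zip(encoder, residual))
                for i in range(len(pixels))]
        # Take a gradient step, then clip the change back into the allowed budget.
        delta = [max(-budget, min(budget, d - step * g)) for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(pixels, delta)]

if __name__ == "__main__":
    random.seed(1)
    encoder = [[random.uniform(-1, 1) for _ in range(64)] for _ in range(4)]
    art = [random.uniform(0.2, 0.8) for _ in range(64)]          # the artist's image
    other = [random.uniform(0.2, 0.8) for _ in range(64)]        # an image in another style
    target = style_features(encoder, other)
    cloaked = cloak(encoder, art, target, budget=0.03)
    print("feature distance before:", squared_distance(style_features(encoder, art), target))
    print("feature distance after: ", squared_distance(style_features(encoder, cloaked), target))
```

The budget plays the role of the “barely visible changes” mentioned in the tweets: the pixels stay close to the original while the toy model’s view of the style shifts.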

Mea culpa. We released Glaze using a front end user interface that reused significant portions of code from DiffusionBee (GPL license). A careless mistake we are now rectifying. We are releasing the source code for Glaze front end, and also working on a rewrite of the frontend. https://t.co/Un3hX33Bbr

— Ben Zhao (@ravenben) March 16, 2023

Finding out more about Glaze and where to install the program

Glaze is available to download for free at glaze.cs.uchicago.edu/download.html. Glaze’s own user agreement explains who owns the rights to the program.